Originally published at: I tried this AI program to fix a scratched-up, blurry photo of my old-country ancestors | Boing Boing

…

Yikes! It makes them look like you descended from a long line of crazed serial killers.

Réalisant mon espoir, je me lance vers la gloire, okay!

Eyes seem like they cause a lot of trouble for AI models. I’d guess it’s a combination of the fact that human brains pay a lot of attention to the details of eyes, so we notice anything “off” about them right away, and the fact that these machine learning models don’t have an underlying framework for the physiology beneath the pixels. So they don’t know, for example, that two eyes should look the same direction and everyone looks just a bit like

Fun Fact: My ex-MIL was Polish, but born in Belarus.

I do have some old pics of my native ancestors of the Navarre family. Maybe I should give it a go!

Old photos are blurry precisely because in the past, people had weird eyes. No one could stand the way their eyes looked in photos, so the cameras and film were constructed to obscure them.

The increased use of AI “image restoration” is causing some, ah, interesting issues that are only going to increase. This one in particular will leave users with mistaken beliefs about what people in old photographs actually looked like, with biases in AI coming into play. (A lot of people’s ancestors will suddenly get a lot whiter.) Recently there was a whole conspiracy theory built around people using neural network-based “image sharpening” tools that created artifacts that people believed showed real features in the image. Cops are increasingly going to be using these kinds of tools to “reveal” who the suspects are in blurry photos, and then use that completely generated image as “evidence.”

The after pictures look too good. Skin like airbrushed magazine models.

(But the eyes … [shudder])

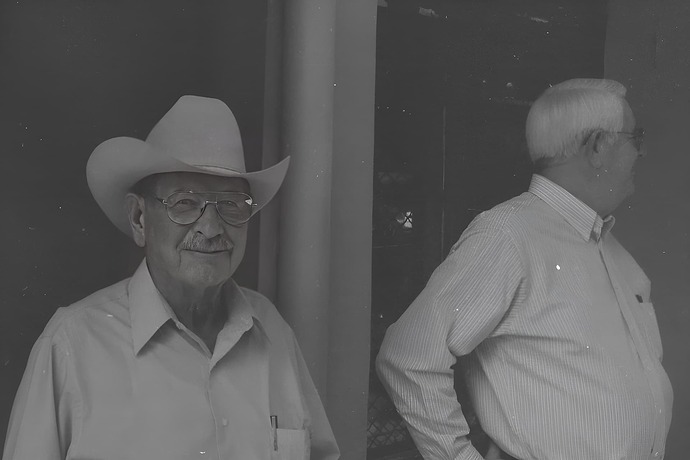

I find value in this maybe not so much as an old-timey photo restorer, but as a very aggressive denoiser, which might create an interesting look in some cases. Here’s a before and after of a photo I took of a friend with weird makeup:

Here’s another example, before and after, of a photo that didn’t need a lot of restoration, but was very grainy because it was taken with high ASA film. Kind of cool results. I can imagine doing this on individual frames of video for an interesting effect:

They made everyone look prettier, younger, and less grim than I interpret from the originals.

You don’t need AI to introduce bias. Decades ago, before AI, people created enhanced close-up images of the Dealey Plaza grassy knoll, supposedly showing a 2nd gunman.

Or just people with weak eye muscles.

Great. Now the scratches look sharper and more distinct. I thought this program was designed to remove scratches?

(Couldn’t be simpler, really. Take a picture. Print it onto photo paper. Scan it. Now physically damage the photo. Artificially age it. Train the model to reconstruct the scratch-free photo.)
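For anyone curious what that recipe might look like in software rather than with real paper and sandpaper, here is a rough sketch. It is only an assumption about how one could fake the damage: the function names `add_scratches` and `make_training_pair` are made up for illustration, the "photo" is a plain grid of gray values, and this is not how the program in the article was actually trained.

```python
import random

def add_scratches(image, num_scratches=3, seed=0):
    """Return a damaged copy of a grayscale image (list of rows, values 0.0-1.0)."""
    rng = random.Random(seed)
    height, width = len(image), len(image[0])
    damaged = [row[:] for row in image]  # copy so the clean original is untouched
    for _ in range(num_scratches):
        # Each scratch is a roughly vertical white line that drifts sideways.
        x = rng.randrange(width)
        for y in range(height):
            damaged[y][x] = 1.0  # assumption: scratches scan as pure white
            x = min(width - 1, max(0, x + rng.choice((-1, 0, 1))))
    return damaged

def make_training_pair(clean, seed=0):
    """Pair a synthetically damaged input with its clean target."""
    return add_scratches(clean, seed=seed), clean

# A flat gray 8x8 "photo" stands in for a real scan.
clean = [[0.5] * 8 for _ in range(8)]
damaged, target = make_training_pair(clean, seed=42)
```

A model would then be trained to map `damaged` back to `target`; the training loop itself is left out here.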

I personally use this with great success on videos and photos.

Imagine a bad piece of taxidermy, where the most impressive aspect is that the artist procured the finest quality glass eyes, and put them in roughly the correct position…

This is impressive. The enhanced images really do look like they match the originals.

The main problem is the eyes, which look unnatural.

Some of the issue with eyes may be from the use of blue-sensitive orthochromatic photo film, which was typical 100 years ago. Colors that are close to blue will be bleached out. This is why the irises of actors from the 1920s look so strange. The AI engine may be struggling with that if it has been trained on pictures taken with panchromatic film.
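A quick way to see the effect is to convert the same colors to gray with two different sets of channel weights. This is just a sketch: the panchromatic weights are the standard Rec. 601 luma weights used as a stand-in, while the orthochromatic weights and the sample iris and skin colors are made-up illustrative values, not measurements of any real film stock.

```python
# Rec. 601 luma weights, used here as a stand-in for a panchromatic response.
PANCHROMATIC = (0.299, 0.587, 0.114)
# Hypothetical weights: no red sensitivity, blue dominant (an assumption).
ORTHOCHROMATIC = (0.0, 0.4, 0.6)

def to_gray(rgb, weights):
    """Weighted sum of the R, G, B channels (each in 0.0-1.0)."""
    return sum(channel * weight for channel, weight in zip(rgb, weights))

# Illustrative colors only.
blue_iris = (0.3, 0.5, 0.8)
skin = (0.8, 0.6, 0.5)

for name, rgb in (("blue iris", blue_iris), ("skin", skin)):
    pan = to_gray(rgb, PANCHROMATIC)
    ortho = to_gray(rgb, ORTHOCHROMATIC)
    print(f"{name}: panchromatic={pan:.2f} orthochromatic={ortho:.2f}")
```

With these sample values the iris comes out darker than the skin under the panchromatic weights but lighter than the skin under the orthochromatic ones, which is the pale, washed-out eye look in very old photos.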